What If Machines Could Learn the Way Children Do?

A good number of us shout at our laptops when they misbehave, often to no avail. Perhaps soon they will listen. Could we one day teach them—much like we do children or pets—how to behave?

For the majority of human history, we have survived and flourished based on our ability to learn. Today’s machines learn too, but only in limited ways: Siri perks up at the sound of your voice, and traffic lights react to the flux of cars. They can respond to direct instructions, such as specific inputs and commands; they can be programmed to recognize patterns; and, in some instances, they can learn for themselves. But our machines may have the potential to become the robotic equivalent of hunter-gatherer children, who are arguably unrivaled in the full range of activities they undertake to learn to engage with their worlds.

It may seem odd to juxtapose the lives of hunter-gatherer children—who quickly become adept at bringing in berries, figs, tubers, and even small game such as hyrax and galagos (in parts of Africa, for example), sharing their haul with family and friends, and helping to raise their siblings—with the comparatively flat existence of machines that have no culture to speak of, and little personality to boot. While forager children are taught an array of skills, machines merely need to remain on our good side so we do not switch them off (or throw them out a window). But what if we could develop machines that learn not only through direct instruction but also through a lot of imitation and play, as hunter-gatherer children do?

Machines that are more humanlike learners could prove powerful allies in solving some of our most pressing problems.

For the time being, most machine learning simply involves cajoling algorithms in the right direction so that a computer can, say, figure out whether a scan shows signs of cancer or how many people are likely to click on an ad. This sort of training is carried out by experts who themselves have been taught the requisite technical wizardry.

Machines can also teach themselves to get better at certain tasks through what is known as “reinforcement learning.” In this type of learning, the machine tries to solve a problem or win a game by learning the most rewarding actions needed to achieve the desired end, even though it does not necessarily know the long-term ramifications of any particular action.

For a concrete example, let’s look at chess. This game was difficult for computers to play because there are more possible games of chess than there are atoms in the observable universe. So a computer could not, within a reasonable timeframe, work through all the possibilities and pick the moves most likely to win. Through reinforcement learning, a computer can learn to weigh short-term rewards, such as capturing an opponent’s queen at the cost of exposing itself to more risk, against the long-term reward of winning the game.
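To make that trade-off concrete, here is a minimal sketch of one common reinforcement learning method, tabular Q-learning, on a made-up toy problem rather than chess. Everything in it is an assumption chosen for illustration: a row of four squares, a "grab" action that pays a small reward at once and ends the round, an "advance" action that pays nothing until the far end is reached, and the particular reward sizes and learning settings. It is not how DeepMind's programs work internally.

```python
import random

GRAB, ADVANCE = 0, 1      # the two actions available on every square
N_STATES = 4              # squares 0..3; advancing from square 3 reaches the goal
GOAL_REWARD = 10.0        # large delayed reward for reaching the goal (assumed value)
GRAB_REWARD = 1.0         # small immediate reward that ends the round (assumed value)


def step(state, action):
    """Return (reward, next_state, done) for the toy environment."""
    if action == GRAB:
        return GRAB_REWARD, state, True
    if state + 1 == N_STATES:
        return GOAL_REWARD, state, True
    return 0.0, state + 1, False


def train(episodes=5000, alpha=0.5, gamma=0.9, epsilon=0.5, seed=0):
    """Learn a table of action values by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon, otherwise take the best-known action.
            if rng.random() < epsilon:
                action = rng.choice((GRAB, ADVANCE))
            else:
                action = max((GRAB, ADVANCE), key=lambda a: q[state][a])
            reward, next_state, done = step(state, action)
            # Value of an action = reward now + discounted value of what follows.
            future = 0.0 if done else gamma * max(q[next_state])
            q[state][action] += alpha * (reward + future - q[state][action])
            state = next_state
    return q


q_table = train()
policy = ["advance" if q[ADVANCE] > q[GRAB] else "grab" for q in q_table]
print(policy)
```

Early on, the learner tends to grab the quick reward because that is all it has seen. Only after occasional exploration stumbles on the distant payoff does that value trickle back, square by square, until advancing should look better than grabbing everywhere.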

Reinforcement learning was the approach used by Google’s DeepMind to create AlphaGo, the computer program that defeated top professional Go players, and AlphaGo Zero, a program that mastered the board game by teaching itself. Its successor, AlphaZero, used the same self-teaching approach to become an expert chess player in less than four hours.

While machine learning includes many powerful techniques, computers are still limited in their ability to solve problems across domains. Although DeepMind’s programs are good at strategic board games, they cannot easily turn to other tasks such as diagnosing illnesses or planning a holiday. Humans, on the other hand, are wonderfully flexible and inventive learners.

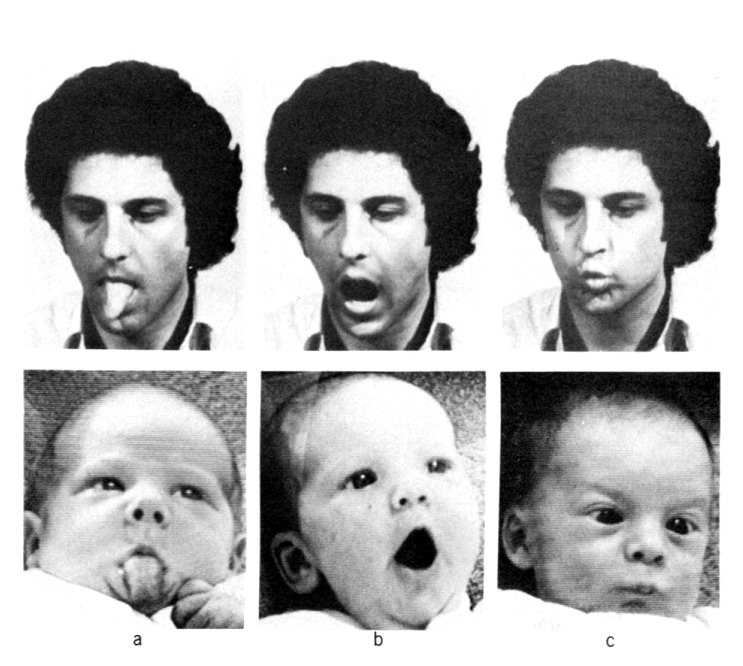

Direct instruction, which is what many of us think of as “teaching” and the way we currently teach machines, is far from the only form of teaching or learning. The value of learning through firsthand experience is evident across cultures. Indeed, anthropological studies of learning in smaller-scale societies, such as those of foragers, could show us ways to build smarter machines. According to a review of ethnographies conducted by psychologist Sheina Lew-Levy and her team, which included an archaeologist and two anthropologists, imitation is an important aspect of learning. Children even overimitate: In performing a task, they copy both the relevant and the irrelevant steps demonstrated by a capable adult. (Imagine if your yoga instructor ululated while shifting from the downward dog position to half lord of the fishes; the wailing would be an example of an irrelevant step but one that you would likely copy anyway.)

Adults tend not to hide their activities away from children in forager societies, giving ample opportunity for youngsters to learn through imitation. Imitating all steps faithfully, even the seemingly pointless ones, could help preserve complex aspects of culture across generations, although the meanings of certain steps might not be entirely apparent to the people performing them. Overimitation is not just common among forager children, though: Young children in Brisbane, Australia, overimitate as much as the children of Aboriginal Australians and San foragers in Botswana and South Africa. And in the Central African Republic, forager children in the Aka community overimitated less than neighboring Ngandu farmer children.

Across a wide and diverse range of forager groups, children also learn by playing house. They build small huts with fire pits and pretend to dig for roots and hunt, with some children playing the hunted animals. Anthropologist Danny Naveh has argued that in the case of Nayaka children who dwell in the forests of South India, playing “animal” helps them develop a compassionate stance wherein they learn to see the animals as other “persons” with whom they share a world.

Anthropology shows us the many routes toward becoming human; it might also help us develop more humanlike artificial intelligences. Machines able to learn through imitation or through exploring and playing in the world could prove to be powerful problem-solvers. Building flexible and capable learners could allow us to engage with more creative machines that are able to tackle ever more complex issues.

One day we might even be able to teach a computer as we teach a child. The diversity in teaching and learning techniques exhibited by humans around the world and outside of the classroom—as shown through studies of forager children—might be unfamiliar to many involved in machine learning and artificial intelligence research today. But awareness of this diversity might yield new insights for how we could design machines to be more humanlike learners, capable of being taught by many human teachers, not merely skilled programmers.