Requiem for a War Robot

Excerpted from War Virtually: The Quest to Automate Conflict, Militarize Data, and Predict the Future by Roberto J. González, published by the University of California Press. © 2022 by Roberto J. González.

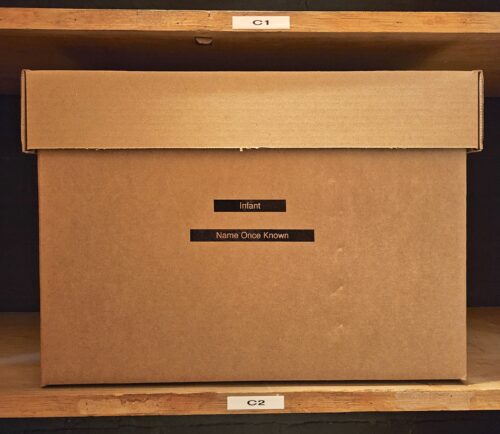

The blistering late afternoon wind ripped across Camp Taji, a sprawling U.S. military base north of Baghdad in an area known as the Sunni Triangle. In a desolate corner of the outpost, where the feared Iraqi Republican Guard once manufactured mustard gas, nerve agents, and other chemical weapons, a group of American soldiers and Marines solemnly gathered around an open grave, dripping sweat in the 114-degree heat. They were paying their final respects to Boomer, a fallen comrade who had been an indispensable team member for years. Days earlier, he had been literally blown apart by a roadside bomb.

As a bugle mournfully sounded the last few notes of “Taps,” a soldier raised his rifle and fired a long series of volleys, a 21-gun salute. In 2013, the troops, who included members of an elite Army unit specializing in explosive ordnance disposal (EOD), had decorated Boomer posthumously with a Bronze Star and Purple Heart. With help from human operators, the diminutive remote-controlled robot had protected thousands of troops from harm by finding and disarming hidden explosives.

Boomer was a Multi-function Agile Remote-Controlled robot, or MARCbot, manufactured by Exponent, a small Silicon Valley firm. Weighing just over 30 pounds, MARCbots look like a cross between a Hollywood camera dolly and an oversized Tonka truck.

Despite their toy-like appearance, the devices leave a lasting impression. In an online discussion on Reddit about EOD support robots, a soldier wrote, “Those little bastards can develop a personality, and they save so many lives.”

An infantryman responded, “We liked those EOD robots. I can’t blame you for giving your guy a proper burial, he helped keep a lot of people safe and did a job that most people wouldn’t want to do.” Some EOD team members have written letters to companies that manufacture the robots, describing their bravery and willingness to sacrifice themselves.

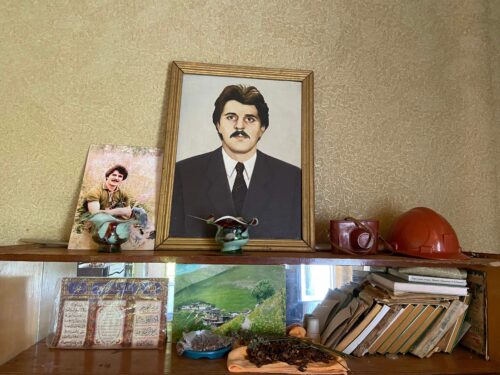

Some warfighters even personalized their droids with “body” art. One Marine who was stationed in Iraq told me about the close bond that developed between an especially resilient “Johnny 5”—a nickname given to a type of robot—and the members of his EOD unit: “One particular Johnny 5 had seen so much shit and survived so many IEDs [improvised explosive devices], that they began tattooing him with Sharpies,” he explained. “Story has it that when Johnny finally met his match, each team member took home a tattooed body part.”

But while some EOD teams established emotional bonds with their robots, others loathed them, especially if they malfunctioned. “My team once had a robot that was obnoxious,” another Marine who served in Iraq told me. “It would frequently accelerate for no reason, steer whichever way it wanted, stop, et cetera. This often resulted in this stupid thing driving itself into a ditch right next to a suspected IED.” Eventually, the team sent the robot on a suicide mission when they encountered an IED in the field: “We drove him straight over the pressure plate, and blew the stupid bastard to pieces.”

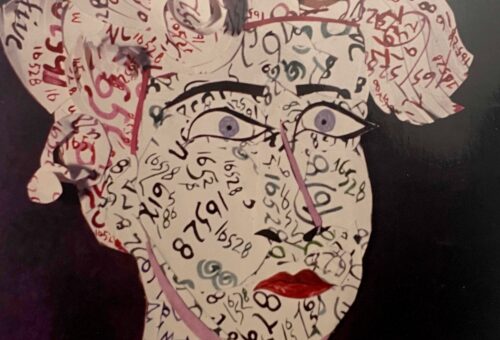

At first glance, there’s something odd about battle-hardened warriors treating remote-controlled devices like either brave, loyal pets or clumsy, stubborn clods—but we shouldn’t be surprised. People in many regions have anthropomorphized tools and machines, assigning them human traits and characteristics. For generations, Melanesian islanders have christened canoes with humorous nicknames to recognize their idiosyncrasies. In India, Guatemala, and other countries, bus drivers name their vehicles, protect them with deities’ images, and “dress” them in exuberant colors. Throughout the 20th century, British, German, French, and Russian troops talked about tanks, airplanes, and ships as if they were people. And in Japan, robots’ roles have rapidly expanded into the intimate spaces of home—an extension of what anthropologists call “techno-animism.”

Some observers interpret these accounts as unsettling glimpses into a future in which men and women are as likely to empathize with artificially intelligent machines as with members of their own species. From this perspective, what makes robot funerals unnerving is the idea of an emotional slippery slope. If soldiers are bonding with clunky pieces of remote-controlled hardware, what are the prospects of humans forming emotional attachments with machines once they’re more autonomous in nature, nuanced in behavior, and anthropoid in form?

And then, there’s a more troubling question: On the battlefield, will Homo sapiens be capable of dehumanizing members of its own species (as it has for centuries), even as it simultaneously humanizes the robots sent to kill them?

As an anthropologist exploring the world of virtual warfare, I’ve been struck by the unbridled enthusiasm that many scientists, policymakers, and journalists have for data-driven weapon systems. For the past decade, U.S. defense contractors and influential Pentagon officials have relentlessly promoted robotic technologies, promising a future in which humans and automatons will form combat teams. President Joe Biden’s embrace of “over-the-horizon capabilities” that “can strike terrorists and targets without American boots on the ground” is a signal that the current administration will continue waging war, virtually, across the globe.

These transformations aren’t inevitable, but they’re becoming a self-fulfilling prophecy. The New York Times notes, “Almost unnoticed outside defense circles, the Pentagon has put artificial intelligence at the center of its strategy to maintain the United States’ position as the world’s dominant military power.” And the Financial Times reports that “the advance of artificial intelligence brings with it the prospect of robot-soldiers battling alongside humans—and one day eclipsing them altogether.”

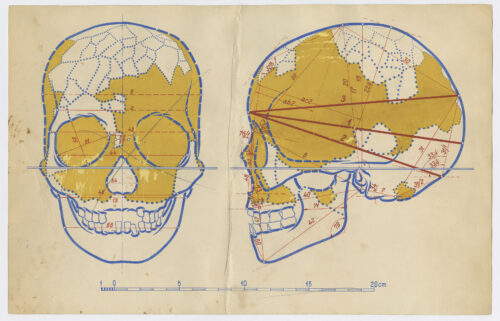

But what exactly is a “robot-soldier”? Is it a remote-controlled armor-clad box on wheels, reliant upon explicit, continuous human commands for direction? Is it a device that can be activated and left to operate semi-autonomously, with limited human oversight or intervention? Is it a droid capable of selecting targets (using facial recognition software or other forms of artificial intelligence) and initiating attacks without human involvement? Is it a “centaur” warfighter—a seamlessly integrated human-machine team that optimizes battlefield performance? Hundreds of possible technological configurations lie between remote control and full autonomy.

The U.S. military’s robotic and autonomous systems include a vast array of artifacts that rely on remote control or artificial intelligence: bulbous-nosed aerial drones; boxy, tank-like vehicles; sleek warships and submarines; automated missiles; and robots of various shapes and sizes—bipedal androids, insectile “swarming” machines, quadrupedal gadgets that trot like dogs or mules, and streamlined aquatic devices resembling fish, mollusks, or crustaceans.

These various arrangements imply different kinds of relationships and interactions between people and mechanical objects, as well as new ethical questions about who bears responsibility for a robot’s actions.

Anthropologist Lucy Suchman succinctly frames the issue as a question about human agency in the face of increasing reliance on technological systems. She points to an emerging paradox in which soldiers’ “bodies become increasingly entangled with machines, in the interest of keeping them apart from the bodies of others,” such as suspected enemies and civilians living under military occupation. As the human-machine interface ties soldiers and robots more closely together, it physically separates human warfighters from foreign others.

The consequences are ironic and potentially deadly. Sometimes, robotic and autonomous systems seem designed to ensure that “our” warriors should never see, hear, or get close to enemy others, much less understand them, as if their thoughts and motivations were contagious. Such taboos and magical thinking also underlie much of the military’s behavioral modeling software, which is often cloaked in the high-tech mystique of artificial intelligence, big data, and predictive analytics.

The military’s push toward autonomous systems is linked to the growing interconnection between humans and digitally networked technologies, a process that began in the late 20th century, then gained greater momentum in the early 2000s. Archaeologist Ian Hodder argues that high-tech entanglements might be described as forms of entrapment:

“We use terms such as ‘air’ book, the ‘cloud,’ the ‘Web,’ all of which terms seem light and insubstantial, even though they describe technologies based on buildings full of wires, enormous use of energy, cheap labor, and toxic production and recycling processes. … It would be difficult to give up smartphones and big data; there is already too much invested, too much at stake. The things seem to have taken us over … [and] our relationship with digital things has become asymmetrical.”

The Defense Department’s quest to automate and autonomize the battlefield is part of these larger material and cultural environments.

Defense establishment elites from public, private, and nonprofit sectors repeat pro-roboticization rhetoric over and over again: The machines will keep American troops safe because they can perform dull, dirty, dangerous tasks; they will result in fewer civilian casualties since robots will be able to identify enemies with greater precision than humans; they’ll be cost-effective and efficient, allowing more to get done with less; and the devices will allow the U.S. to stay ahead of China, which, according to some experts, will soon surpass America’s technological capabilities.

The evidence supporting these assertions is questionable at best, and sometimes demonstrably false—for example, an “unmanned” aerial Predator drone requires at least three human controllers: a pilot, a sensor operator, and a mission intelligence coordinator. Yet the Pentagon’s propagandists and pundits echo the talking points, and over time many people take them for granted as fact.

Perhaps the most compelling rhetorical argument is autonomy’s apparent inevitability. Pentagon officials need only point to the fact that automobile manufacturers and Silicon Valley firms are developing and testing self-driving cars on America’s streets and highways. The momentum and hype favor rapid acceptance of these technologies, even if many questions remain about their safety.

Given the circumstances, why not just stop worrying and learn to love the robots?

This excerpt has been edited slightly for length, style, and clarity.