Learning to Trust Machines That Learn

Imagine lying on a hospital bed. Doctors with grave expressions hover. One leans down to tell you that you are terribly sick and says they recommend a risky procedure as your best hope. You ask them to explain what’s going on. They cannot. Your trust in the doctors ebbs away.

Replace the doctors with a computer program and you more or less have the state of artificial intelligence (AI) today. Technology increasingly insinuates itself into our lives, affecting the decisions we make and the decisions others make for us. More and more, we give computers responsibility and autonomy to decide on life-changing events. If your hospital bed happens to be in the Memorial Sloan Kettering Cancer Center, for example, your oncologist might have asked IBM’s Watson for advice. Watson is a computer system that can answer questions posed in everyday language, most famously using its skills to win the TV quiz show Jeopardy! in 2011.

The problem is, Watson cannot tell you why it decided you have cancer. Machines are currently incapable of explaining their decisions. And as J.K. Rowling wrote in Harry Potter and the Chamber of Secrets, “Never trust anything that can think for itself if you can’t see where it keeps its brain.”

Some AI researchers are attempting to address this by creating programs that can essentially keep an eye on their own decision-making processes and describe how they figured things out, an approach known as “explainable AI.” But there is a deeper question: Is explainable AI important, or is it merely a remedy for our own discomfort?

We must choose to trust or distrust the machines. Just as we learn to trust other humans (or not), this is likely to be a convoluted, multifaceted, and at times fraught process. Trust emerges not only from the outcomes of any given interaction but also through expertise, context, experience, and emotions.

Anthropologists interested in the scientific study of trust have embraced game theory for this very purpose. It all started, appropriately enough, with computers.

In game theory, situations in which you are affected by the behavior of others are known as games. Game theory gives us a way of calculating how these social interactions might proceed: we look at how an individual following a particular strategy (a set of rules of conduct for given circumstances) fares depending on the strategies of others. In the early 1980s, political scientist Robert Axelrod hosted a tournament of games on his computer to explore which strategies won out in the long run. He found that a copycat, tit-for-tat strategy of initially being cooperative and then simply copying your social partner’s previous move did best overall, even in a field that included utterly selfish strategies.
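To make the copycat idea concrete, here is a minimal sketch. It assumes the game is a repeated prisoner's dilemma with payoffs of 5, 3, 1, and 0, the setup usually associated with Axelrod's tournaments, though the text above does not specify it. The function names and the ten-round match length are illustrative choices, not details of the tournament itself.

```python
# Assumed payoffs: (my move, partner's move) -> (my points, partner's points).
# "C" means cooperate, "D" means defect (act selfishly).
PAYOFFS = {
    ("C", "C"): (3, 3),  # mutual cooperation
    ("C", "D"): (0, 5),  # I cooperate, partner exploits me
    ("D", "C"): (5, 0),  # I exploit a cooperating partner
    ("D", "D"): (1, 1),  # mutual defection
}


def tit_for_tat(own_history, partner_history):
    """Cooperate first, then copy the partner's previous move."""
    return "C" if not partner_history else partner_history[-1]


def always_defect(own_history, partner_history):
    """An utterly selfish strategy: never cooperate."""
    return "D"


def play_match(strategy_a, strategy_b, rounds=10):
    """Play a repeated game and return the two total scores."""
    history_a, history_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        # Each strategy sees its own past moves and its partner's past moves.
        move_a = strategy_a(history_a, history_b)
        move_b = strategy_b(history_b, history_a)
        points_a, points_b = PAYOFFS[(move_a, move_b)]
        score_a += points_a
        score_b += points_b
        history_a.append(move_a)
        history_b.append(move_b)
    return score_a, score_b


if __name__ == "__main__":
    print(play_match(tit_for_tat, always_defect))    # (9, 14)
    print(play_match(tit_for_tat, tit_for_tat))      # (30, 30)
    print(play_match(always_defect, always_defect))  # (10, 10)
```

With these assumed payoffs, tit-for-tat loses a ten-round match against an unconditional defector by 9 points to 14, yet two tit-for-tat players earn 30 points each, far more than the 10 each that two defectors manage. A strategy's tournament total therefore depends on the mix of opponents it meets.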

In an effort to unravel human behavior, social scientists took these abstract mathematical games into the lab. They looked at how people, typically students, responded to incentives such as money, course credit, or pizza. Students, though, are not very representative of most humans: They tend to be WEIRD, or Western, educated, industrialized, rich, and democratic. So anthropologists took these games into the field.

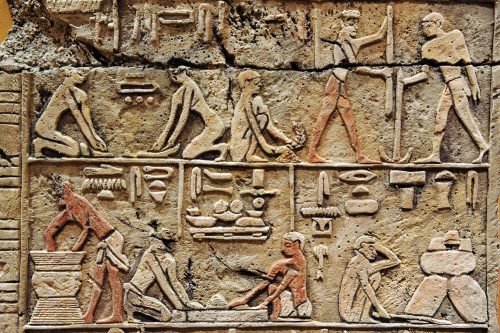

By adopting game theory across the world, anthropologists have been able to trace and quantify the great diversity and commonalities in human social behaviors, including trust.

Over a decade ago, Lee Cronk, an anthropologist at Rutgers University, played a trust game with Maasai people in Kenya. The trust game involves a give-and-take interaction between two players. Both are given an amount of money by the experimenter, so they start off on an equal footing. Player one decides how much of her endowment to give to the other player. The experimenter then multiplies the amount given (for example, tripling it), and the second player can choose to return a portion to player one, reciprocating the trust shown. In a cold and economically rational world of perfect self-interest, neither player would share anything.
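The arithmetic of a single round can be sketched as follows. The function name, the endowment of 100, and the amounts sent and returned are hypothetical values chosen for illustration. They are not figures from Cronk's study.

```python
def trust_game(endowment, sent, multiplier, returned):
    """Return the final holdings of (player one, player two) after one round.

    Both players start with the same endowment. Player one sends `sent`,
    the experimenter multiplies it, and player two hands back `returned`.
    """
    if not 0 <= sent <= endowment:
        raise ValueError("player one can only send part of her endowment")
    received = sent * multiplier
    # Assumption: player two returns at most what she received.
    if not 0 <= returned <= received:
        raise ValueError("player two can only return part of what she received")
    player_one = endowment - sent + returned
    player_two = endowment + received - returned
    return player_one, player_two


if __name__ == "__main__":
    # Hypothetical round: send half, amount is tripled, 60 comes back.
    print(trust_game(endowment=100, sent=50, multiplier=3, returned=60))
    # Perfect self-interest: nothing sent, nothing returned.
    print(trust_game(endowment=100, sent=0, multiplier=3, returned=0))
```

In the hypothetical round, both players end up better off than the 100 each that they keep when nothing is shared, which is why sharing nothing counts as the narrowly self-interested outcome rather than the best one.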

Cronk found that most of the Maasai players gave around half of their endowments to their social partner, who returned slightly less but still more than nothing. However, something interesting happened when Cronk told everyone they were playing an osotua game. In the Maa language osotua literally means “umbilical cord,” but the concept describes peaceful relationships between people, allowing them to ask for gifts or favors.

In an osotua relationship, you should only give to people in need and only if you have the means to do so. When the game was framed in this way, the Maasai participants gave less of their money and returned less. What looked like a breakdown in trust turned out to be observance of an important social norm. This study and others like it have consistently shown that familiarity and local norms are important aspects of good relations, and that trust takes many forms across the world.

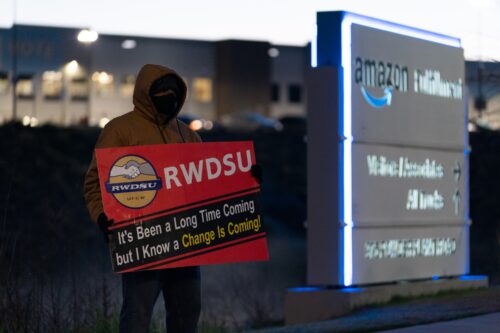

Technology journalist Will Knight wrote, “Just as society is built upon a contract of expected behavior, we will need to design AI systems to respect and fit with our social norms.” But by whose norms should the systems abide? Implicit is the sense that the norms will be those of the developers and pundits. As is too often the case, there is a Western-centric bias at play here.

Some social scientists think of trust as the extent to which one individual’s interests encapsulate the interests of others. But as things stand, machines don’t have interests—in the sense that they cannot care about themselves, their families or friends, their status, wealth, or reproduction. For a machine to develop interests, let alone be capable of understanding and explaining them, does it require self-reflection? Consciousness?

When we (or, more pertinently, they) develop artificial consciousness, we will be beyond simple issues of our own comfort, familiarity, and trust. In a realm where machines evolve imagination, ingenuity, and desire, they are no longer our tools; they become, perhaps, trustworthy and capable of trust themselves.

In such a future, looking up from your imagined hospital bed, the robotic doctor tending to you should be able to explain why you are sick and why she prescribes a particular course of treatment. She would begin to seem ever more human, and it would be possible for you to put your trust in her, just as you might trust any flesh-and-blood doctor.